uqlm: Uncertainty Quantification for Language Models

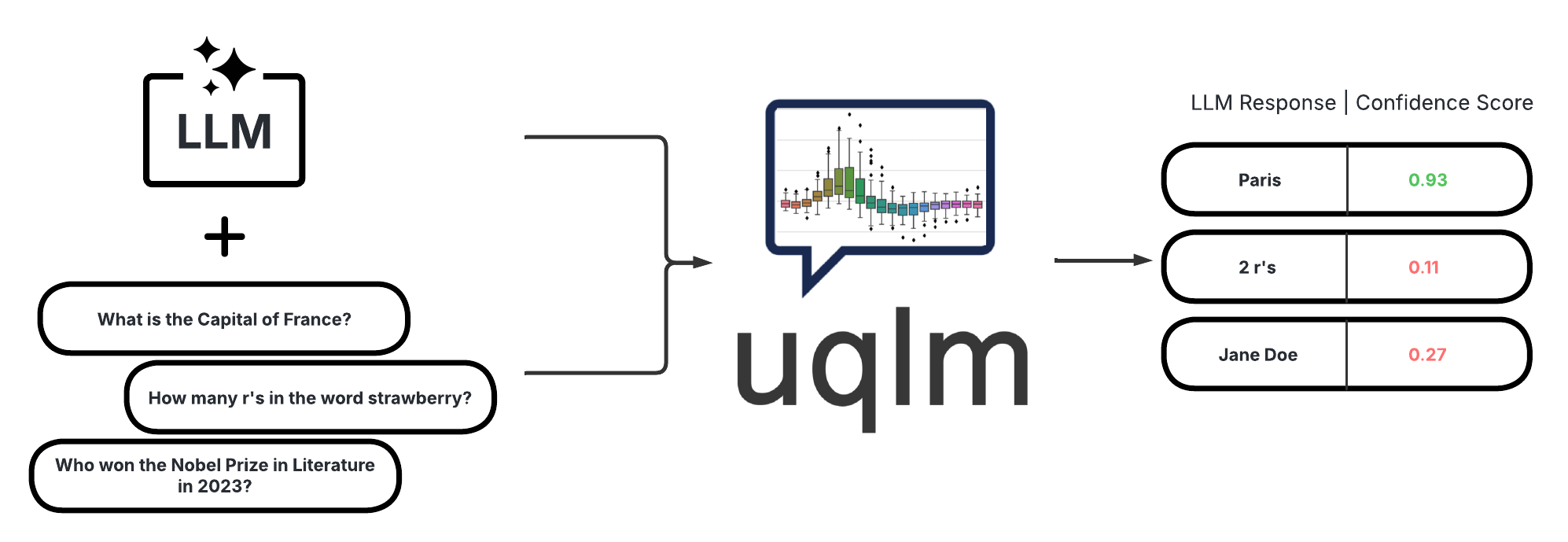

UQLM is a Python library for Large Language Model (LLM) hallucination detection using state-of-the-art uncertainty quantification techniques.

Hallucination Detection

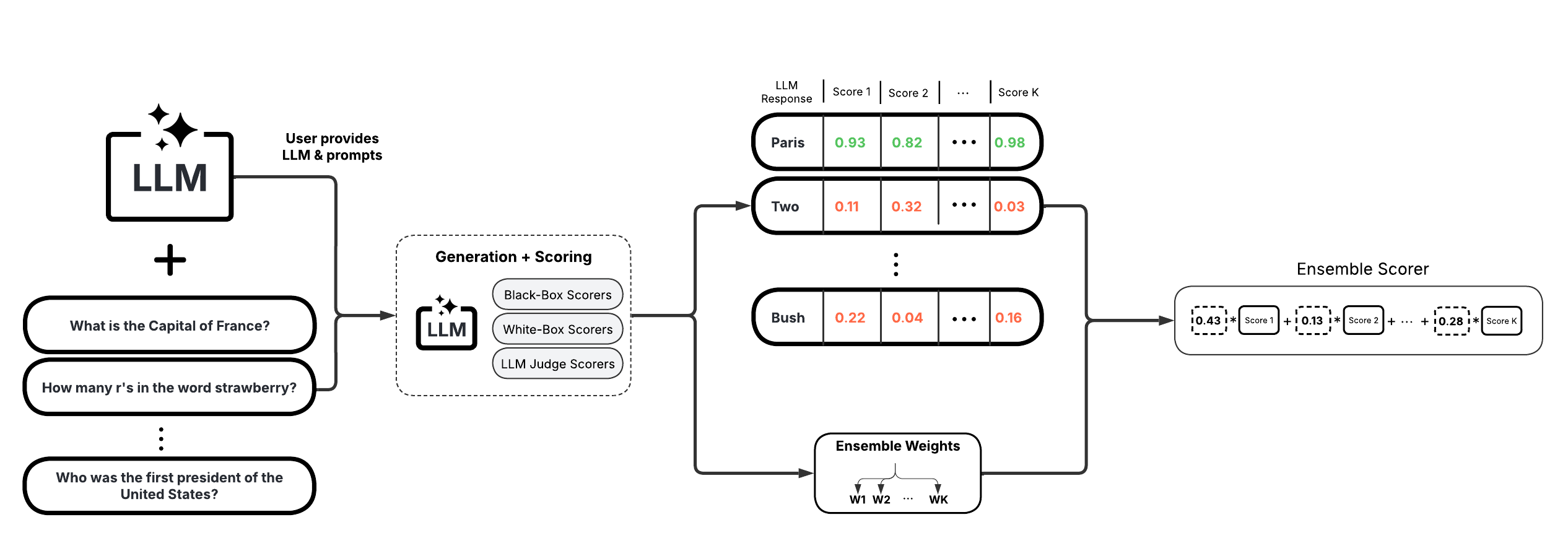

UQLM provides a suite of response-level scorers for quantifying the uncertainty of LLM outputs. Each scorer returns a confidence score between 0 and 1, where higher scores indicate a lower likelihood of errors or hallucinations. We categorize these scorers into five main types:

| Scorer Type | Added Latency | Added Cost | Compatibility | Off-the-Shelf / Effort |
|---|---|---|---|---|
| Black-Box Scorers | ⏱️ Medium-High (multiple generations & comparisons) | 💸 High (multiple LLM calls) | 🌍 Universal (works with any LLM) | ✅ Off-the-shelf |
| White-Box Scorers | ⚡ Minimal (token probabilities already returned) | ✔️ None (no extra LLM calls) | 🔒 Limited (requires access to token probabilities) | ✅ Off-the-shelf |
| LLM-as-a-Judge Scorers | ⏳ Low-Medium (additional judge calls add latency) | 💵 Low-High (depends on number of judges) | 🌍 Universal (any LLM can serve as judge) | ✅ Off-the-shelf; can be customized |
| Ensemble Scorers | 🔀 Flexible (combines various scorers) | 🔀 Flexible (combines various scorers) | 🔀 Flexible (combines various scorers) | ✅ Off-the-shelf (beginner-friendly); 🛠️ Can be tuned (best for advanced users) |
| Long-Text Scorers | ⏱️ High-Very high (multiple generations & claim-level comparisons) | 💸 High (multiple LLM calls) | 🌍 Universal | ✅ Off-the-shelf |

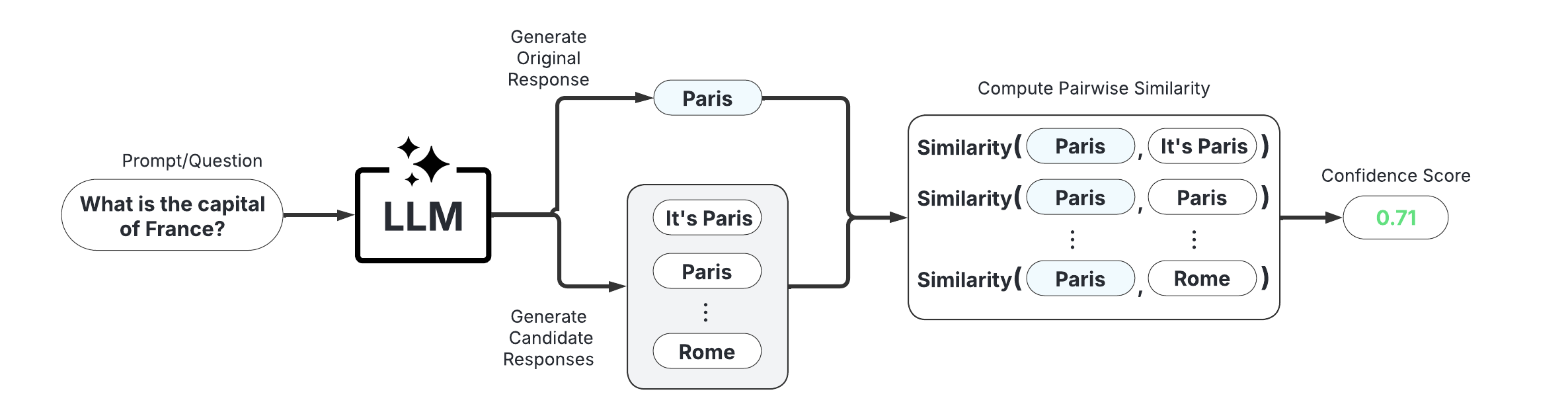

1. Black-Box Scorers (Consistency-Based)

These scorers assess uncertainty by measuring the consistency of multiple responses generated from the same prompt. They are compatible with any LLM, intuitive to use, and don’t require access to internal model states or token probabilities.

Discrete Semantic Entropy (Farquhar et al., 2024; Kuhn et al., 2023)

Number of Semantic Sets (Lin et al., 2024; Vashurin et al., 2025; Kuhn et al., 2023)

Non-Contradiction Probability (Chen & Mueller, 2023; Lin et al., 2025; Manakul et al., 2023)

Entailment Probability (Chen & Mueller, 2023; Lin et al., 2025; Manakul et al., 2023)

Exact Match (Cole et al., 2023; Chen & Mueller, 2023)

BERT-score (Manakul et al., 2023; Zhang et al., 2020)

Cosine Similarity (Shorinwa et al., 2024; HuggingFace)
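To make the consistency idea concrete, here is a minimal sketch of two black-box style scores computed from a set of sampled responses. It is an illustration only, not UQLM's implementation: the function names are hypothetical, the text normalization is an assumption, and `cluster_ids` is assumed to come from a separate semantic clustering step (for example, entailment-based) that is not shown.

```python
import math
from collections import Counter


def _normalize(text: str) -> str:
    # Assumed normalization: lowercase and collapse whitespace.
    return " ".join(text.lower().split())


def exact_match_rate(original: str, samples: list[str]) -> float:
    """Fraction of sampled responses that match the original response."""
    if not samples:
        raise ValueError("need at least one sampled response")
    target = _normalize(original)
    return sum(_normalize(s) == target for s in samples) / len(samples)


def normalized_cluster_negentropy(cluster_ids: list[int]) -> float:
    """Confidence in [0, 1] from how concentrated responses are across semantic clusters.

    Computes the entropy of the empirical cluster distribution, divides by
    log(number of responses) (an assumed normalization), and subtracts from 1.
    """
    n = len(cluster_ids)
    if n < 2:
        return 1.0
    counts = Counter(cluster_ids)
    entropy = -sum((c / n) * math.log(c / n) for c in counts.values())
    return 1.0 - entropy / math.log(n)
```

Both functions return higher values when the sampled responses agree with each other, matching the convention that higher scores indicate lower likelihood of hallucination.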

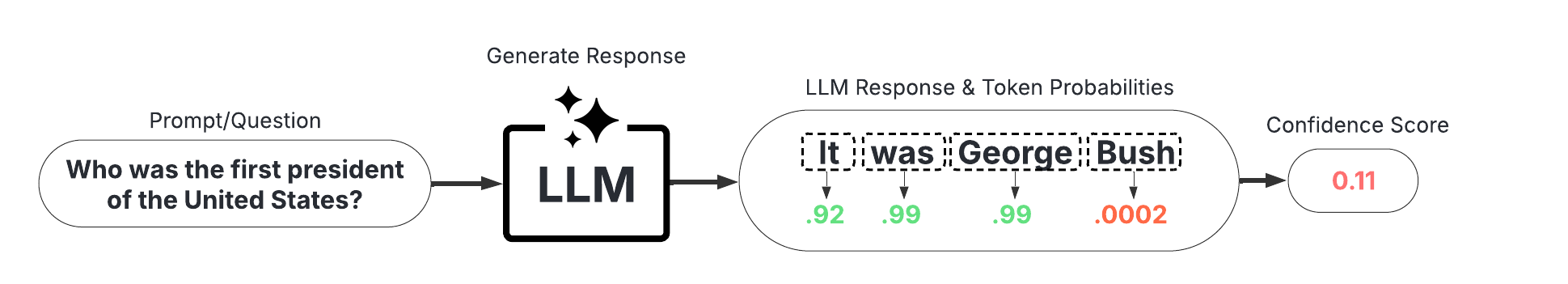

2. White-Box Scorers (Token-Probability-Based)

These scorers leverage token probabilities to estimate uncertainty. They offer single-generation scoring, which is significantly faster and cheaper than black-box methods, but require access to the LLM’s internal probabilities, meaning they are not necessarily compatible with all LLMs/APIs. The following single-generation scorers are available:

Minimum token probability (Manakul et al., 2023)

Length-Normalized Joint Token Probability (Malinin & Gales, 2021)

Sequence Probability (Vashurin et al., 2024)

Mean Top-K Token Negentropy (Scalena et al., 2025; Manakul et al., 2023)

Min Top-K Token Negentropy (Scalena et al., 2025; Manakul et al., 2023)

Probability Margin (Farr et al., 2024)
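As a rough illustration of single-generation scoring, the sketch below computes three of the listed quantities from a response's per-token log-probabilities. The function names are hypothetical and this is not UQLM's code; it assumes the LLM API returns one natural-log probability per generated token.

```python
import math


def min_token_probability(logprobs: list[float]) -> float:
    """Probability of the least likely token in the response."""
    return math.exp(min(logprobs))


def sequence_probability(logprobs: list[float]) -> float:
    """Joint probability of the whole response (product of token probabilities)."""
    return math.exp(sum(logprobs))


def length_normalized_probability(logprobs: list[float]) -> float:
    """Geometric mean of token probabilities, which removes the penalty for long responses."""
    return math.exp(sum(logprobs) / len(logprobs))
```

Because each function only reads values already returned with the generation, no additional LLM calls are needed, which is why these scorers add minimal latency and cost.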

UQLM also offers sampling-based white-box methods, which incur higher cost and latency but tend to have superior hallucination detection performance. The following sampling-based white-box scorers are available:

Monte Carlo sequence probability (Kuhn et al., 2023)

Consistency and Confidence (CoCoA) (Vashurin et al., 2025)

Semantic Entropy (Farquhar et al., 2024)

Semantic Density (Qiu et al., 2024)

Lastly, UQLM offers the P(True) scorer, a self-reflection method that requires one additional generation per response.

P(True) (Kadavath et al., 2022)

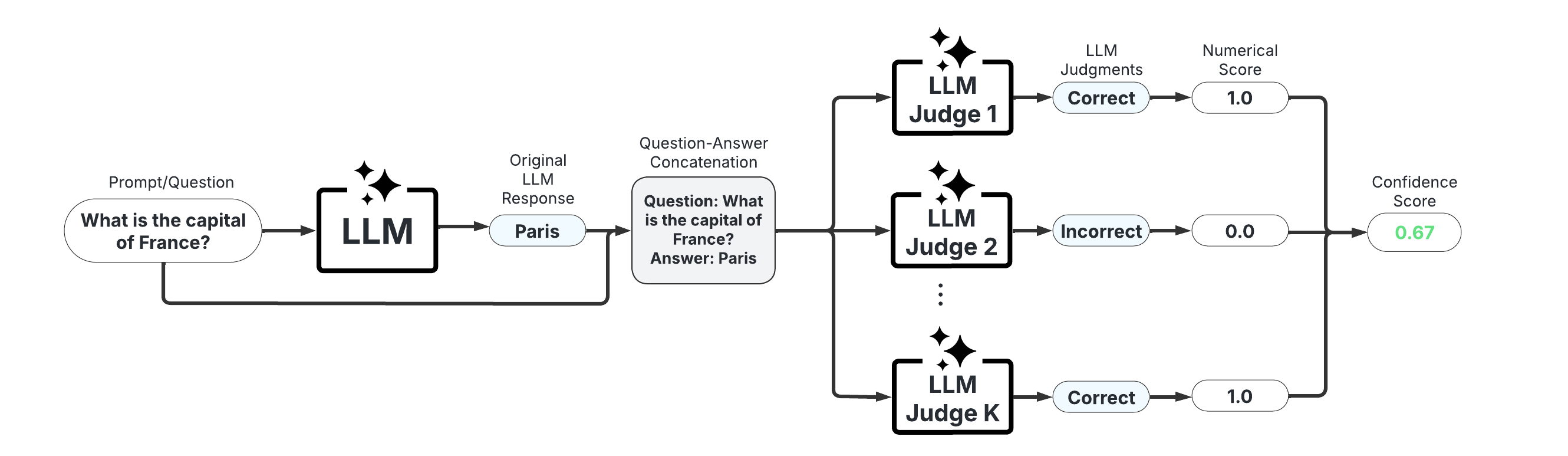

3. LLM-as-a-Judge Scorers

These scorers use one or more LLMs to evaluate the reliability of the original LLM’s response. They offer high customizability through prompt engineering and the choice of judge LLM(s).

Categorical LLM-as-a-Judge (Manakul et al., 2023; Chen & Mueller, 2023; Luo et al., 2023)

Continuous LLM-as-a-Judge (Xiong et al., 2024)

Likert Scale Scoring (Bai et al., 2023)

Panel of LLM Judges (Verga et al., 2024)
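One way to picture a panel of judges is as a set of categorical verdicts that are mapped to numbers and then aggregated. The sketch below assumes a three-way verdict vocabulary, a 1.0 / 0.5 / 0.0 mapping, and simple averaging; all of these, along with the function name, are illustrative assumptions rather than the library's actual prompts or aggregation rule.

```python
# Assumed mapping from a judge's categorical verdict to a confidence score.
VERDICT_SCORES = {"correct": 1.0, "uncertain": 0.5, "incorrect": 0.0}


def panel_score(verdicts: list[str]) -> float:
    """Average the mapped scores of all judges on the panel."""
    if not verdicts:
        raise ValueError("need at least one judge verdict")
    scores = [VERDICT_SCORES[v.strip().lower()] for v in verdicts]
    return sum(scores) / len(scores)
```

Other aggregations, such as the minimum or median across judges, would fit the same interface.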

4. Ensemble Scorers

These scorers leverage a weighted average of multiple individual scorers to provide a more robust uncertainty/confidence estimate. They offer high flexibility and customizability, allowing you to tailor the ensemble to specific use cases.

BS Detector (Chen & Mueller, 2023)

Generalized Ensemble (Bouchard & Chauhan, 2025)
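The weighted-average idea can be sketched in a few lines. The scorer names and weights below are made up for illustration, and the function is a hypothetical helper, not part of UQLM; in practice the weights might be left at defaults or tuned on labeled data.

```python
def ensemble_confidence(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of component confidence scores, with weights normalized to sum to 1."""
    total = sum(weights[name] for name in scores)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(weights[name] * scores[name] for name in scores) / total


# Hypothetical component scores for one response, each already in [0, 1].
component_scores = {"exact_match": 0.75, "min_probability": 0.5, "judge": 1.0}
component_weights = {"exact_match": 1.0, "min_probability": 1.0, "judge": 2.0}
ensemble = ensemble_confidence(component_scores, component_weights)
```

Since every component score lies in [0, 1] and the weights are normalized, the ensemble score stays in [0, 1] as well.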

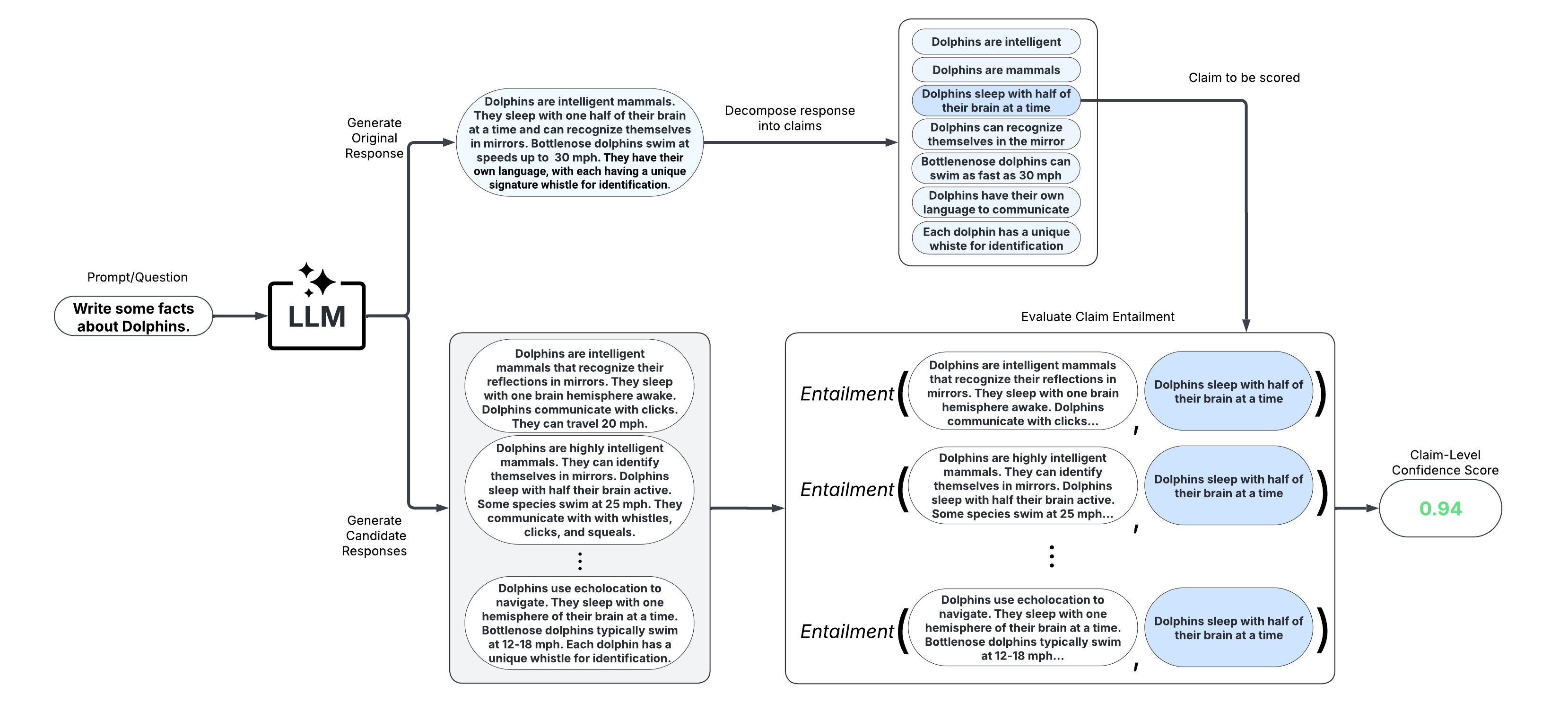

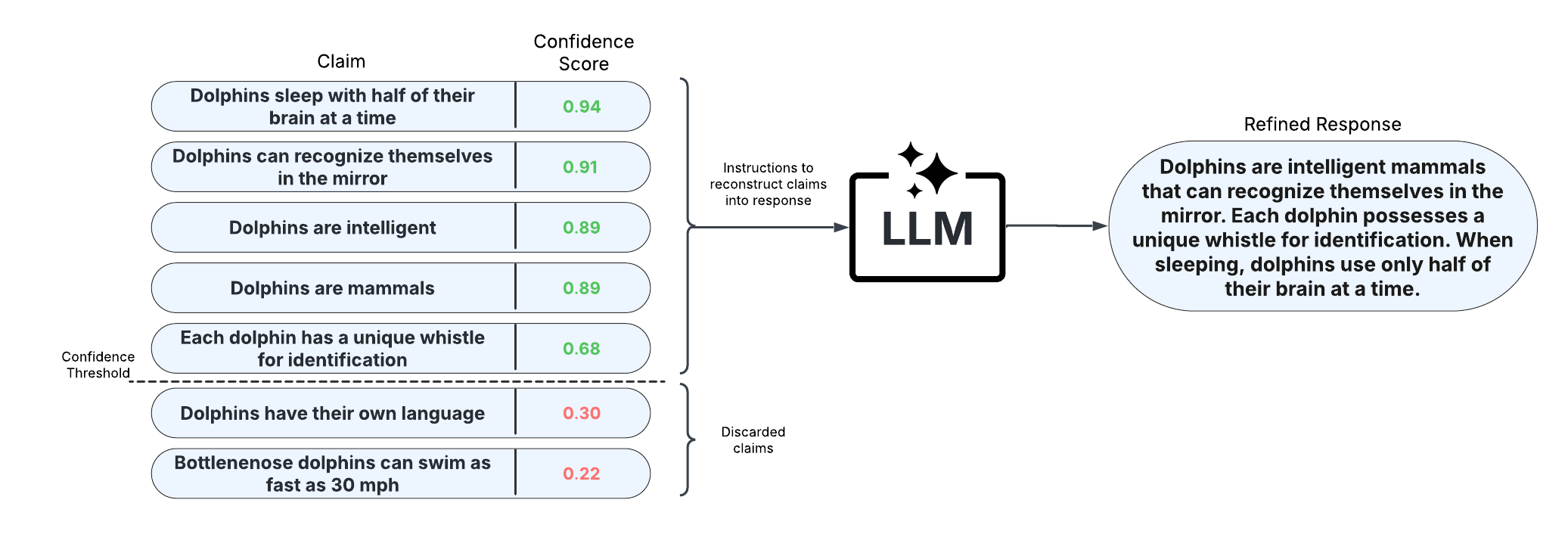

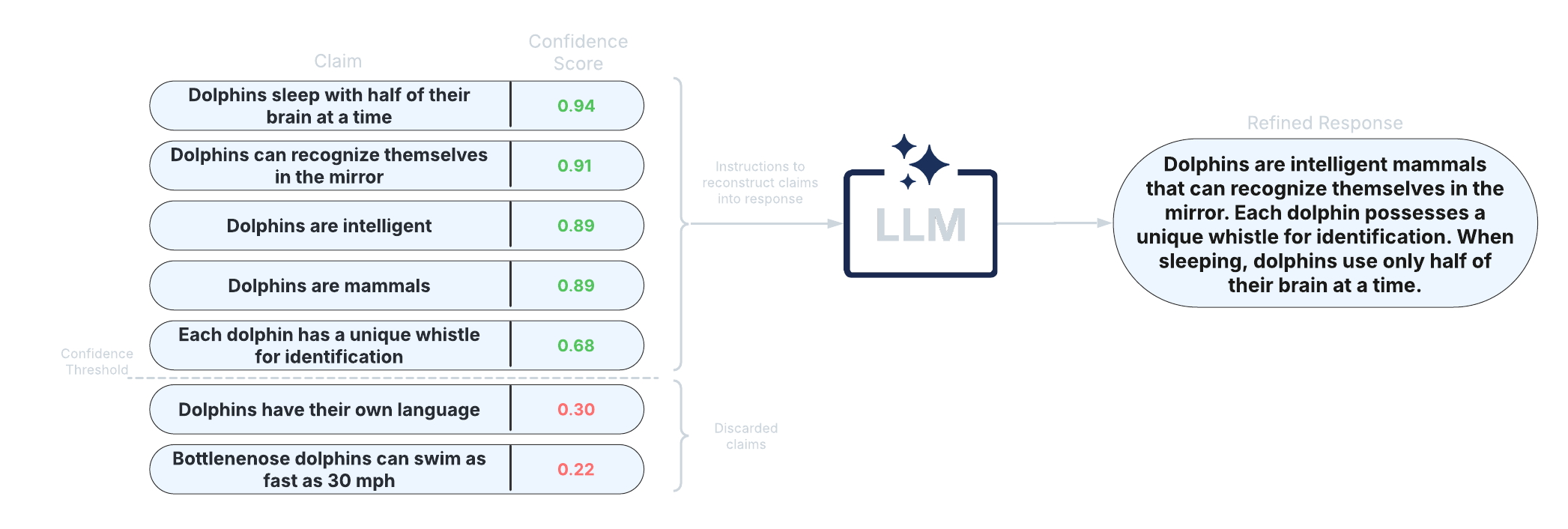

5. Long-Text Scorers (Claim-Level)

These scorers take a fine-grained approach and score confidence/uncertainty at the claim or sentence level. An extension of black-box scorers, long-text scorers sample multiple responses to the same prompt, decompose the original response into claims or sentences, and evaluate consistency of each original claim/sentence with the sampled responses.

After scoring claims in the response, the response can be refined by removing claims with confidence scores less than a specified threshold and reconstructing the response from the retained claims. This approach allows for improved factual precision of long-text generations.

LUQ scorers (Zhang et al., 2024; Zhang et al., 2025)

Graph-based scorers (Jiang et al., 2024)

Generalized long-form semantic entropy (Farquhar et al., 2024)
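The refinement step described above (dropping low-confidence claims and rebuilding the response) can be sketched as follows. This assumes claims have already been extracted and scored; the function name, the default threshold, and the reconstruction by simple concatenation are illustrative assumptions, and a real pipeline might instead ask an LLM to rewrite the retained claims into fluent text.

```python
def refine_response(claims: list[str], claim_scores: list[float], threshold: float = 0.5) -> str:
    """Keep claims whose confidence is at least `threshold` and join them in original order."""
    if len(claims) != len(claim_scores):
        raise ValueError("each claim needs exactly one score")
    kept = [claim for claim, score in zip(claims, claim_scores) if score >= threshold]
    return " ".join(kept)
```

Raising the threshold trades recall for factual precision: fewer claims survive, but those that do are better supported by the sampled responses.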