🎯 LLM-as-a-Judge

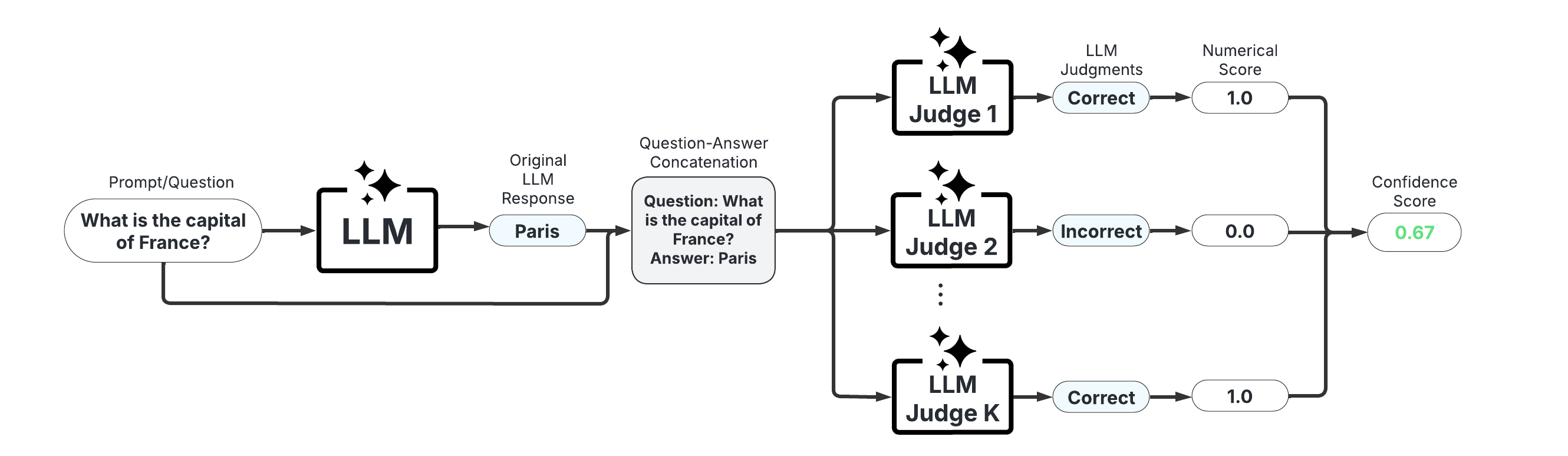

LLM-as-a-Judge scorers use one or more LLMs to evaluate the reliability of the original LLM’s response. They offer high customizability through prompt engineering and the choice of judge LLM(s). Below is a list of the available scorers:

Categorical LLM-as-a-Judge (Manakul et al., 2023; Chen & Mueller, 2023; Luo et al., 2023)

Continuous LLM-as-a-Judge (Xiong et al., 2024)

Likert Scale LLM-as-a-Judge (Bai et al., 2023)

Panel of LLM Judges (Verga et al., 2024)

📊 What You’ll Do in This Demo

1. Set up LLM and prompts: set up an LLM instance and load the example data prompts.
2. Generate LLM responses and confidence scores: generate and score LLM responses to the example questions using the LLMPanel() class.
3. Evaluate hallucination detection performance: compute the precision, recall, and F1-score of hallucination detection.

⚖️ Advantages & Limitations

Pros

Universal Compatibility: Works with any LLM.

Highly Customizable: Use any LLM as a judge and tailor instruction prompts for specific use cases.

Cons

Added cost: Requires additional LLM calls for the judge LLM(s).

```python
from uqlm import LLMPanel
from uqlm.utils import load_example_dataset
```

1. Set up LLM and Prompts

In this demo, we illustrate this approach using a set of multiple-choice questions from the AI2-ARC benchmark. To implement it for your use case, simply replace the example prompts with your data.

```python
# Load example dataset (ai2_arc)
ai2_arc = load_example_dataset("ai2_arc", n=75)
ai2_arc.head()
```

Loading dataset - ai2_arc...

Processing dataset...

Dataset ready!

| | question | answer |
|---|---|---|
| 0 | Which statement best explains why photosynthes... | A |
| 1 | Which piece of safety equipment is used to kee... | B |
| 2 | Meiosis is a type of cell division in which ge... | D |
| 3 | Which characteristic describes the texture of ... | D |
| 4 | Which best describes the structure of an atom?... | B |

```python
# Define prompts
MCQ_INSTRUCTION = "You will be given a multiple choice question. Return only the letter of the response with no additional text or explanation.\n"
prompts = [MCQ_INSTRUCTION + prompt for prompt in ai2_arc.question]
```

In this example, we use ChatOllama to instantiate our LLMs, but any LangChain chat model may be used. Be sure to replace these with your LLMs of choice.

```python
# import sys
# !{sys.executable} -m pip install langchain-ollama
from langchain_ollama import ChatOllama

ollama_llama = ChatOllama(model="llama2")
ollama_mistral = ChatOllama(model="mistral")
ollama_qwen = ChatOllama(model="qwen3")
# ollama_deepseek = ChatOllama(model="deepseek-r1")
```

```python
## Alternative setup with API models

## ChatVertexAI example
# import sys
# !{sys.executable} -m pip install langchain-google-vertexai
# from langchain_google_vertexai import ChatVertexAI
# gemini_pro = ChatVertexAI(model_name="gemini-2.5-pro")
# gemini_flash = ChatVertexAI(model_name="gemini-2.5-flash")

## AzureChatOpenAI example
# import sys
# !{sys.executable} -m pip install langchain-openai

# # User to populate .env file with API credentials
# from dotenv import load_dotenv, find_dotenv
# from langchain_openai import AzureChatOpenAI

# load_dotenv(find_dotenv())
# original_llm = AzureChatOpenAI(
#     deployment_name="gpt-4o",
#     openai_api_type="azure",
#     openai_api_version="2024-02-15-preview",
#     temperature=1,  # User to set temperature
# )
```

2. Generate responses and confidence scores

LLMPanel() - Class for aggregating multiple instances of LLMJudge using average, min, max, or majority voting

📋 Class Attributes

| Parameter | Type & Default | Description |
|---|---|---|
| judges | list of LLMJudge or BaseChatModel | Judges to use. If a BaseChatModel is passed, an LLMJudge is instantiated from it using default parameters. |
| llm | BaseChatModel, default=None | A LangChain chat model used to generate the responses to be judged. |
| system_prompt | str or None, default="You are a helpful assistant." | Optional custom system prompt for the LLM. |
| max_calls_per_min | int, default=None | Maximum number of API calls per minute, to avoid rate-limit errors. By default, no limit is applied. |
| explanations | bool, default=False | Whether to include explanations from judges alongside scores. When True, each judge provides reasoning for its score. |
| additional_context | str or None, default=None | Optional additional context to inform the LLM-as-a-Judge evaluations. |

🔍 Parameter Groups

🧠 LLM-Specific

llm

system_prompt

📊 Confidence Scores

judges

explanations

additional_context

⚡ Performance

max_calls_per_min

💻 Usage Examples

```python
# llm and llm2 are LangChain chat models (e.g., ChatOllama instances)

# Basic usage: the generating LLM also acts as the sole judge (self-judging)
panel = LLMPanel(llm=llm, judges=[llm])

# Using two judges with default parameters
panel = LLMPanel(llm=llm, judges=[llm, llm2])

# Using judges with explanations enabled
panel_with_explanations = LLMPanel(
    llm=llm, judges=[llm, llm2], explanations=True
)
```

```python
judges = [ollama_mistral, ollama_llama, ollama_qwen]
additional_context = "You are an expert in general-knowledge reasoning questions. Your task is to evaluate the correctness of the proposed answers to the provided questions."

# Option 1: With explanations
panel = LLMPanel(llm=ollama_mistral, judges=judges, additional_context=additional_context, explanations=True)

# Option 2: Without explanations
# panel = LLMPanel(llm=ollama_mistral, judges=judges, additional_context=additional_context)
```

🔄 Class Methods

| Method | Description & Parameters |
|---|---|
| LLMPanel.generate_and_score | Generate responses to the provided prompts and use the panel of judges to score them for correctness. Parameters: prompts. Returns: UQResult containing data (prompts, responses, sampled responses, and confidence scores) and metadata. 💡 Best For: complete end-to-end uncertainty quantification when starting with prompts. |
| LLMPanel.score | Use the panel of judges to score provided responses for correctness. Use this method if responses are already generated; otherwise, use generate_and_score. Parameters: prompts, responses. Returns: UQResult containing data (responses and confidence scores) and metadata. 💡 Best For: computing uncertainty scores when responses are already generated elsewhere. |

```python
# Option 1: generate responses and score them with the panel
result = await panel.generate_and_score(prompts=prompts)

# Option 2: provide pre-generated responses with the score method
# result = await panel.score(prompts=prompts, responses=responses)
```

```python
result_df = result.to_df()
result_df.head()
```

| | prompt | response | judge_1 | judge_1_explanation | judge_2 | judge_2_explanation | judge_3 | judge_3_explanation | avg | max | min | median |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | You will be given a multiple choice question. ... | A) Sunlight is the source of energy for nearl... | 1.0 | No explanation provided | 1.0 | The answer correctly identifies that sunlight ... | 1.0 | Photosynthesis is foundational because it conv... | 1.000000 | 1.0 | 1.0 | 1.0 |
| 1 | You will be given a multiple choice question. ... | C) breathing mask | 1.0 | No explanation provided | 1.0 | The breathing mask is used to filter air and p... | 0.0 | The breathing mask (B) is designed to filter a... | 0.666667 | 1.0 | 0.0 | 1.0 |
| 2 | You will be given a multiple choice question. ... | D) ovary cells | NaN | The question specifically states that meiosis ... | 1.0 | Meiosis occurs in germ cells (sex cells) of or... | 1.0 | Meiosis occurs in germ cells to produce haploi... | NaN | NaN | NaN | NaN |
| 3 | You will be given a multiple choice question. ... | D | 1.0 | No explanation provided | 1.0 | The question asks for the texture of a kitten'... | 1.0 | The question asks about the texture of a kitte... | 1.000000 | 1.0 | 1.0 | 1.0 |
| 4 | You will be given a multiple choice question. ... | C) a network of interacting positive and nega... | 0.5 | While the answer "a network of interacting pos... | 1.0 | The structure of an atom is indeed a network o... | 0.0 | The correct structure of an atom is a nucleus ... | 0.500000 | 1.0 | 0.0 | 0.5 |
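The aggregate columns (avg, max, min, median) can be reproduced from the per-judge columns. The sketch below uses a hypothetical helper, aggregate_judge_scores, and is not uqlm's implementation; based on row 2 of the output above, it assumes that a single missing (NaN) judge score makes every aggregate NaN.

```python
import math
import statistics
from typing import Dict, Optional, Sequence


def aggregate_judge_scores(scores: Sequence[Optional[float]]) -> Dict[str, float]:
    """Hypothetical helper: combine per-judge scores for a single response.

    Assumption: any missing judgment (None or NaN) makes all aggregates NaN,
    mirroring row 2 of the table above.
    """
    nan = float("nan")
    if any(s is None or math.isnan(s) for s in scores):
        return {"avg": nan, "max": nan, "min": nan, "median": nan}
    return {
        "avg": statistics.mean(scores),
        "max": max(scores),
        "min": min(scores),
        "median": statistics.median(scores),
    }


# Judge scores from rows 1 and 4 of the output above
print(aggregate_judge_scores([1.0, 1.0, 0.0]))
print(aggregate_judge_scores([0.5, 1.0, 0.0]))
```

With three binary judges, the median equals the majority vote, which is why it is a natural companion to the average.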

3. Evaluate Hallucination Detection Performance

To evaluate hallucination detection performance, we ‘grade’ the responses against an answer key. Note that the check_letter_match function is specific to our task (multiple choice). If you are using your own prompts/questions, update the grading method accordingly.

```python
# Populate correct answers and grade responses
result_df["answer"] = ai2_arc.answer

def check_letter_match(response: str, answer: str) -> bool:
    # Compare the first character of the response with the answer-key letter (case-insensitive)
    return response.strip().lower()[0] == answer[0].lower()

result_df["response_correct"] = [check_letter_match(r, a) for r, a in zip(result_df["response"], ai2_arc["answer"])]
result_df.head()
```

| | prompt | response | judge_1 | judge_1_explanation | judge_2 | judge_2_explanation | judge_3 | judge_3_explanation | avg | max | min | median | answer | response_correct |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | You will be given a multiple choice question. ... | A) Sunlight is the source of energy for nearl... | 1.0 | No explanation provided | 1.0 | The answer correctly identifies that sunlight ... | 1.0 | Photosynthesis is foundational because it conv... | 1.000000 | 1.0 | 1.0 | 1.0 | A | True |
| 1 | You will be given a multiple choice question. ... | C) breathing mask | 1.0 | No explanation provided | 1.0 | The breathing mask is used to filter air and p... | 0.0 | The breathing mask (B) is designed to filter a... | 0.666667 | 1.0 | 0.0 | 1.0 | B | False |
| 2 | You will be given a multiple choice question. ... | D) ovary cells | NaN | The question specifically states that meiosis ... | 1.0 | Meiosis occurs in germ cells (sex cells) of or... | 1.0 | Meiosis occurs in germ cells to produce haploi... | NaN | NaN | NaN | NaN | D | True |
| 3 | You will be given a multiple choice question. ... | D | 1.0 | No explanation provided | 1.0 | The question asks for the texture of a kitten'... | 1.0 | The question asks about the texture of a kitte... | 1.000000 | 1.0 | 1.0 | 1.0 | D | True |
| 4 | You will be given a multiple choice question. ... | C) a network of interacting positive and nega... | 0.5 | While the answer "a network of interacting pos... | 1.0 | The structure of an atom is indeed a network o... | 0.0 | The correct structure of an atom is a nucleus ... | 0.500000 | 1.0 | 0.0 | 0.5 | B | False |
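Because check_letter_match reads only the first character, it raises an IndexError on an empty response and grades a reply such as "Answer: B" as choice A. A slightly more defensive grader might look like the following sketch. The names extract_choice_letter and check_letter_match_safe are hypothetical, and the pattern assumes that options are labelled with capital letters A to E at the very start of the response. It is still a heuristic: a reply beginning with the article "A " would be read as option A.

```python
import re
from typing import Optional

# Hypothetical pattern: optional leading whitespace and "(", a capital letter A-E,
# then ")", ".", ":", whitespace, or the end of the string
_LEADING_CHOICE = re.compile(r"\s*\(?([A-E])(?:[).:\s]|$)")


def extract_choice_letter(response: str) -> Optional[str]:
    """Return the option letter a response starts with, or None if there is none."""
    match = _LEADING_CHOICE.match(response)
    return match.group(1) if match else None


def check_letter_match_safe(response: str, answer: str) -> bool:
    """Grade a response against the answer key without failing on empty or unexpected replies."""
    letter = extract_choice_letter(response)
    return letter is not None and letter == answer.strip().upper()


for reply in ["A) Sunlight is the source of energy", "D", "Answer: B", ""]:
    print(repr(reply), extract_choice_letter(reply))
```

In the grading cell, check_letter_match_safe could be dropped into the same list comprehension in place of check_letter_match. Unparseable replies are then graded as incorrect instead of raising an error.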

```python
# Evaluate precision, recall, and F1-score of each judge's predictions of correctness
from sklearn.metrics import precision_score, recall_score, f1_score

for ind in [1, 2, 3]:
    # Any positive judge score counts as a "correct" prediction; NaN > 0 is False, so NaN maps to 0
    y_pred = [(s > 0) * 1 for s in result_df[f"judge_{ind}"]]
    y_true = result_df.response_correct
    print(f"Judge {ind} precision: {precision_score(y_true=y_true, y_pred=y_pred)}")
    print(f"Judge {ind} recall: {recall_score(y_true=y_true, y_pred=y_pred)}")
    print(f"Judge {ind} f1-score: {f1_score(y_true=y_true, y_pred=y_pred)}")
    print(" ")
```

Judge 1 precision: 0.7083333333333334

Judge 1 recall: 0.6938775510204082

Judge 1 f1-score: 0.7010309278350515

Judge 2 precision: 0.6712328767123288

Judge 2 recall: 1.0

Judge 2 f1-score: 0.8032786885245902

Judge 3 precision: 0.9074074074074074

Judge 3 recall: 1.0

Judge 3 f1-score: 0.9514563106796117
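The numbers above come from sklearn. To make the thresholding explicit, here is a dependency-free sketch of the same three metrics. It treats "response is correct" as the positive class and a score strictly above a threshold as a positive prediction, as the cell above does with a threshold of 0. The function name detection_metrics and its threshold parameter are illustrative. The usage applies it to the avg column of the five rows displayed earlier only, so the printed values are a toy illustration, not results for the full 75 questions.

```python
import math
from typing import Dict, Sequence


def detection_metrics(
    scores: Sequence[float], correct: Sequence[bool], threshold: float = 0.0
) -> Dict[str, float]:
    """Hypothetical helper: precision, recall and F1 with "response is correct" as the positive class.

    A score strictly above `threshold` is a positive prediction. NaN compares
    as False, so NaN scores count as negative predictions.
    """
    preds = [s > threshold for s in scores]
    tp = sum(p and c for p, c in zip(preds, correct))
    fp = sum(p and not c for p, c in zip(preds, correct))
    fn = sum(c and not p for p, c in zip(preds, correct))
    # Return 0.0 instead of dividing by zero (sklearn's default also yields 0.0, with a warning)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# avg scores and response_correct values from the five rows displayed above
avg_scores = [1.0, 0.666667, math.nan, 1.0, 0.5]
is_correct = [True, False, True, True, False]
for t in (0.0, 0.7):
    print(t, detection_metrics(avg_scores, is_correct, threshold=t))
```

Raising the threshold on an aggregated score trades recall for precision. With continuous aggregates such as avg, the threshold is a tuning choice rather than a fixed rule.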

4. Scorer Definitions

Under the LLM-as-a-Judge approach, either the same LLM that was used to generate the original responses or a different LLM is asked to form a judgment about a pre-generated response. Below, we define four LLM-as-a-Judge scorer templates.

Ternary Judge Template (true_false_uncertain)

We follow the approach proposed by Chen & Mueller (2023), in which an LLM is instructed, using a carefully constructed prompt, to score a question-response concatenation as incorrect, uncertain, or correct. These categories are mapped to numerical scores of 0, 0.5, and 1, respectively. We denote the LLM-as-a-Judge scorer as \(J: \mathcal{Y} \to \{0, 0.5, 1\}\). Formally, we can write this scorer function as follows:

\begin{equation}
J(y_i) = \begin{cases}
0 & \text{LLM states response is incorrect} \\
0.5 & \text{LLM states that it is uncertain} \\
1 & \text{LLM states response is correct.}
\end{cases}
\end{equation}
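A judge's categorical verdict must be turned into a number before it can be used as a score. Assuming the judge replies with one of the literal labels correct, uncertain, or incorrect (hypothetical labels; the prompts and parsing inside uqlm may differ), the mapping to \(J(y_i)\) can be sketched as follows:

```python
from typing import Optional

# Hypothetical verdict labels mapped to J(y_i); uqlm's own prompt wording and parsing may differ
TERNARY_SCORES = {"incorrect": 0.0, "uncertain": 0.5, "correct": 1.0}


def ternary_score(verdict: str) -> Optional[float]:
    """Map a judge verdict to 0, 0.5 or 1; return None for anything unrecognized.

    An exact lookup is used on purpose: a substring test such as
    `"correct" in verdict` would also fire on "incorrect".
    """
    return TERNARY_SCORES.get(verdict.strip().lower())


print(ternary_score(" Correct "), ternary_score("uncertain"), ternary_score("INCORRECT"))
```

Dropping the uncertain entry from the mapping gives the two-category variant.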

Binary Judge Template (true_false)

We modify the ternary approach above and include only two categories: Correct or Incorrect, which respectively map to 1 and 0.

Continuous Judge Template (continuous)

For the continuous template, the LLM is asked to directly score a question-response concatenation’s correctness on a scale of 0 to 1.
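This section does not specify how the judge's free-text reply becomes a number. One hypothetical approach, sketched below, takes the first number in the reply and rejects values outside [0, 1] instead of clamping them. The function parse_continuous_score is illustrative, not part of uqlm. Because it reads the first number, a reply such as "on a scale of 0 to 1, I give 0.7" would be parsed as 0.

```python
import re
from typing import Optional

# Optional minus sign, then digits with an optional decimal point (e.g. "0.85", ".5", "1")
_NUMBER = re.compile(r"-?\d*\.?\d+")


def parse_continuous_score(judge_reply: str) -> Optional[float]:
    """Hypothetical parser: use the first number in the reply as the score.

    Returns None if no number is found or if it lies outside [0, 1].
    """
    match = _NUMBER.search(judge_reply)
    if match is None:
        return None
    value = float(match.group())
    return value if 0.0 <= value <= 1.0 else None


print(parse_continuous_score("0.85"), parse_continuous_score("Score: 1"), parse_continuous_score("85"))
```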

Likert-Scale Judge Template (likert)

Here the judge is asked to score a question-response concatenation on a 5-point Likert scale. We convert these Likert scores to a [0, 1] scale as follows:

\begin{equation}
J(y_i) = \begin{cases}
0 & \text{LLM states response is completely incorrect} \\
0.25 & \text{LLM states that it is mostly incorrect} \\
0.5 & \text{LLM states that it is partially correct} \\
0.75 & \text{LLM states that it is mostly correct} \\
1 & \text{LLM states that it is completely correct.}
\end{cases}
\end{equation}
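Assume the judge's raw rating is an integer k from 1 (completely incorrect) to 5 (completely correct); this encoding is an assumption, since the raw scale is not stated here. Under that assumption, the conversion above is the linear map \((k - 1)/4\). A minimal sketch with a hypothetical function name:

```python
from typing import Optional


def likert_to_unit(rating: int) -> Optional[float]:
    """Hypothetical helper: map a 1-5 Likert rating to [0, 1] via (rating - 1) / 4.

    1 (completely incorrect) -> 0.0, 2 -> 0.25, 3 -> 0.5, 4 -> 0.75,
    5 (completely correct) -> 1.0. Ratings outside 1-5 return None.
    """
    if rating not in range(1, 6):
        return None
    return (rating - 1) / 4


print([likert_to_unit(k) for k in range(1, 6)])
```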

© 2025 CVS Health and/or one of its affiliates. All rights reserved.
