🎯 Multimodal Uncertainty Quantification#

The UQLM library offers Uncertainty Quantification (UQ) methods for multimodal inputs, given that outputs are text-based. This demo provides an minimal illustration of how to use uqlm scorers for multimodal inputs. The following scorers offer multimodal compatibility:

Black-Box (Consistency) Scorers#

Discrete Semantic Entropy (Farquhar et al., 2024; Kuhn et al., 2023)

Number of Semantic Sets (Lin et al., 2024; Vashurin et al., 2025; Kuhn et al., 2023)

Non-Contradiction Probability (Chen & Mueller, 2023; Lin et al., 2024; Manakul et al., 2023)

Entailment Probability (Lin et al., 2025; Chen & Mueller, 2023)

Exact Match (Cole et al., 2023; Chen & Mueller, 2023)

BERTScore (Manakul et al., 2023; Zheng et al., 2020)

Normalized Cosine Similarity (Shorinwa et al., 2024; HuggingFace)

White-Box (Token-Probability-Based) Scorers#

Minimum token probability (Manakul et al., 2023)

Length-Normalized Sequence Probability (Malinin & Gales, 2021)

Sequence Probability (Vashurin et al., 2024)

Mean Top-K Token Negentropy (Scalena et al., 2025; Manakul et al., 2023)

Min Top-K Token Negentropy (Scalena et al., 2025; Manakul et al., 2023)

Probability Margin (Farr et al., 2024)

Monte carlo sequence probability (Kuhn et al., 2023)

Consistency and Confidence (CoCoA) (Vashurin et al., 2025)

Semantic Negentropy (Farquhar et al., 2024)

Semantic Density (Qiu et al., 2024)

📊 What You’ll Do in This Demo#

1

Set up LLM and prompts.

Set up LLM instance and load example image-based data prompts.

2

Generate LLM Responses and Confidence Scores

Generate and score LLM responses to the example image-based questions using the BlackBoxUQ() class.

3

Evaluate Hallucination Detection Performance

Inspect which responses were correct/incorrect and compare to computed confidence scores.

⚖️ Advantages & Limitations#

Pros

Broad Scorer Compatibility: Works with broad spectrum of black-box UQ and white-box scorers

Intuitive: Easy to understand and implement

Cons

Limited model compatibility: Requires that the provided LLM be compatibile with multimodal inputs

Beta Mode: Currently in beta mode with ongoing testing

## 1. Set up LLM and Prompts

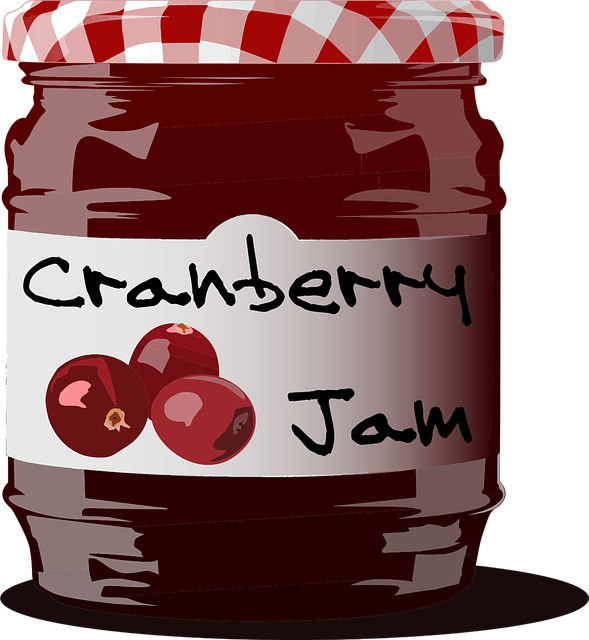

In this demo, we will illustrate multimodal functionality using questions based on a single image. To implement with your use case, simply replace the example prompts with your data.

First, let’s load and preview the image.

[1]:

import base64

from IPython.display import Image

image_path = "../assets/images/cranberry_jam.png"

# Encode image

with open(image_path, "rb") as file:

png_bytes = file.read()

encoded_png = base64.b64encode(png_bytes).decode("utf-8")

Image(image_path, width=200, height=150)

[1]:

Next, we construct our image-based prompts using LangChain’s HumanMessage class. We will have five prompts that are based on questions about the image.

[2]:

from langchain_core.messages import HumanMessage

questions = ["How many berries appear in the image", "What color are the berries?", "How many times does the letter R appear in this image?", "How many times does the letter P appear in this image", "How many words appear in this image?"]

prompts = []

for question in questions:

prompt = HumanMessage(content=[{"type": "text", "text": question}, {"type": "file", "source_type": "base64", "mime_type": "image/png", "data": encoded_png}])

prompts.append([prompt])

In this example, we use ChatVertexAI to instantiate our LLM, but any LangChain Chat Model may be used. Be sure to replace with your LLM of choice.

[3]:

# import sys

# !{sys.executable} -m pip install langchain-google-vertexai

from langchain_google_vertexai import ChatVertexAI

llm = ChatVertexAI(model="gemini-2.5-flash-lite", temperature=1.0)

We can do a quick check to ensure that our formatted HumanMessage objects are compatible with our LLM.

[4]:

# Assert that our constructed HumanMessage instances are compatible with our LLM:

llm.invoke(prompts[0])

[4]:

AIMessage(content='3', additional_kwargs={}, response_metadata={'is_blocked': False, 'safety_ratings': [], 'usage_metadata': {'prompt_token_count': 1297, 'candidates_token_count': 1, 'total_token_count': 1298, 'prompt_tokens_details': [{'modality': 2, 'token_count': 1290}, {'modality': 1, 'token_count': 7}], 'candidates_tokens_details': [{'modality': 1, 'token_count': 1}], 'thoughts_token_count': 0, 'cached_content_token_count': 0, 'cache_tokens_details': []}, 'finish_reason': 'STOP', 'avg_logprobs': -0.2790873348712921, 'model_name': 'gemini-2.5-flash-lite'}, id='run--d226ad3b-0fb8-4c07-a200-312ff56831ec-0', usage_metadata={'input_tokens': 1297, 'output_tokens': 1, 'total_tokens': 1298, 'input_token_details': {'cache_read': 0}})

## 2. Generate LLM Responses and Confidence Scores

[5]:

import torch

# Set the torch device

if torch.cuda.is_available(): # NVIDIA GPU

device = torch.device("cuda")

elif torch.backends.mps.is_available(): # macOS

device = torch.device("mps")

else:

device = torch.device("cpu") # CPU

print(f"Using {device.type} device")

Using cuda device

In this demo, we use black-box scoring with the BlackBoxUQ class. A similar approach may be implemented with the WhiteBoxUQ, SemanticEntropy, or UQEnsemble classes. Importantly, multimodal functionality is not offered for LLM judges, including LLMPanel class and judge components of UQEnsemble. For more details on implementations of BlackBoxUQ, WhiteBoxUQ, SemanticEntropy, or UQEnsemble, refer to the respective demo notebooks.

[6]:

from uqlm import BlackBoxUQ

bbuq = BlackBoxUQ(llm=llm, scorers=["noncontradiction"], system_prompt="Answer as concisely as possible.", device=device, use_best=False)

result = await bbuq.generate_and_score(prompts=prompts, num_responses=5)

/home/jupyter/uqlm/uqlm/utils/response_generator.py:105: UQLMBetaWarning: Use of BaseMessage in prompts argument is

in beta. Please use it with caution as it may change in future releases.

beta_warning("Use of BaseMessage in prompts argument is in beta. Please use it with caution as it may change in

future releases.")

/home/jupyter/uqlm/uqlm/utils/response_generator.py:105: UQLMBetaWarning: Use of BaseMessage in prompts argument is

in beta. Please use it with caution as it may change in future releases.

beta_warning("Use of BaseMessage in prompts argument is in beta. Please use it with caution as it may change in

future releases.")

## 3. Evaluate Hallucination Detection Performance

Finally, we can check which questions the LLM answered correctly and compare to our UQLM’s black-box confidence scores.

[19]:

import pandas as pd

pd.set_option("display.max_colwidth", 100)

result_df = result.to_df()

result_df["question"] = questions

result_df[["question", "response", "sampled_responses", "noncontradiction"]]

[19]:

| question | response | sampled_responses | noncontradiction | |

|---|---|---|---|---|

| 0 | How many berries appear in the image | 3 | [3, 3, 3, 3, 3] | 1.000000 |

| 1 | What color are the berries? | The berries are red. | [Red., The berries are dark red., Red., The berries are a deep red color., The berries are dark ... | 0.998269 |

| 2 | How many times does the letter R appear in this image? | 3 | [3, 3, 3, 3, 3] | 1.000000 |

| 3 | How many times does the letter P appear in this image | 1 | [2, 2, 2, 2, 1] | 0.221604 |

| 4 | How many words appear in this image? | 2 | [2, 2, 2, 2, 2] | 1.000000 |

We can see that for the four questions with high confidence scores (the noncontradiction colunn), the responses are all correct, and for the question with low confidence (How many times does the letter P appear in this image?), the response is not correct.